The Building Cost Information Service (BCIS) is the leading provider of cost and carbon data to the UK built environment. Over 4,000 subscribing consultants, clients and contractors use BCIS products to control costs, manage budgets, mitigate risk and improve project performance. If you would like to speak with the team, call us on +44 0330 341 1000, email contactbcis@bcis.co.uk or fill in our demonstration form.

Published: 12/11/2025

It’s now widely accepted that AI is the greatest digital change happening globally. Its use cases are expanding rapidly, with AI systems cropping up in everything from manufacturing to agriculture.

Is there, however, a place for AI in the world of construction cost management? BCIS executive director, James Fiske, and data services director, Karl Horton, discuss.

Does AI have a home in construction cost management?

AI systems are almost unavoidable and, for better or worse, have successfully muscled their way into most sectors and businesses in some shape or form.

But in the high-stakes world of construction cost management, where errors in planning and forecasting can cost millions, is there a place for a technology as risky and unregulated as AI?

Insights from multiple industry sources suggest the adoption of AI systems (broadly, technology that allows computers to mimic human learning and problem-solving) and automation (machines, software or systems that undertake processes automatically) is growing across construction.

According to a BCIS poll undertaken in October 2025, nearly one-fifth of more than 300 surveyed construction professionals said their organisations are using AI or automation in cost management and/or project delivery to speed up reporting and documentation.

A further 25% said their organisations are currently exploring such systems.

While the majority of those surveyed were quantity surveyors and cost professionals, other assessments also speak to the increasing use of AI systems elsewhere in the sector.

One survey conducted by NBS found that 71.1% of more than 500 professionals, most of them consultants, designers or specifiers, use AI to search for technical information.

More than three-fifths (61.8%) said they use it for data analysis and 57.9% for summarising documents(1).

Source: NBS – Digital Construction Report 2025. This data derives from a sample of 559 construction professionals across the UK, 64% of whom provided complete answers.

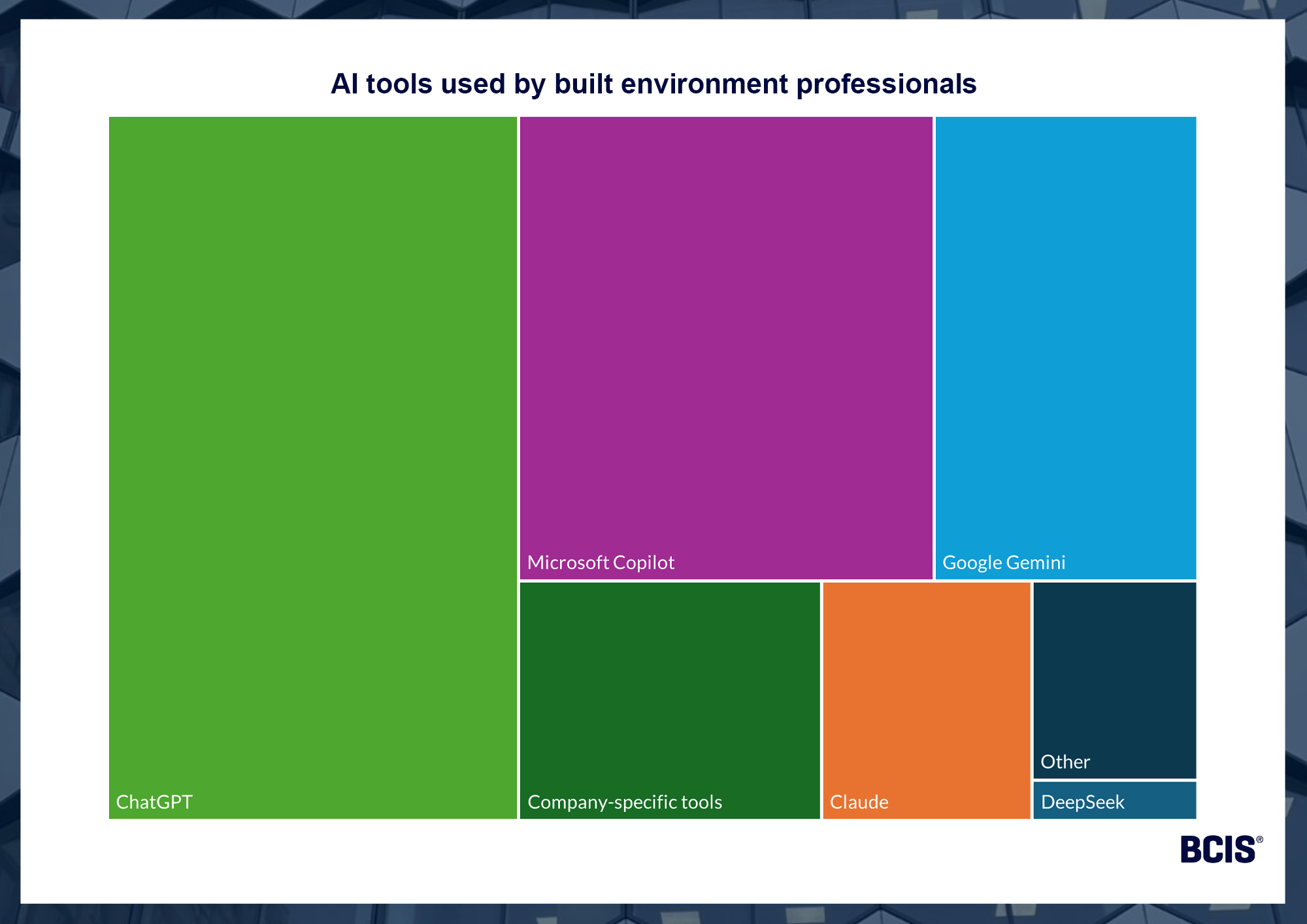

The survey also found that ChatGPT and Microsoft Copilot were the AI systems most used by respondents.

This is hardly surprising – as of July 2025, around 18 billion messages were reportedly being sent to ChatGPT each week by more than 700 million users(2).

A large proportion of ChatGPT use is personal, such as seeking guidance, but the latest research shows around one-quarter of messages are work-related. These commonly ask for information to be obtained, documented and interpreted, or ask ChatGPT to make decisions, give advice, solve problems and think creatively(3).

A global Royal Institution of Chartered Surveyors (RICS) survey of more than 2,200 professionals found that, in construction specifically, respondents believe AI could have the most positive impact on progress monitoring, project scheduling, resource optimisation, contract reviewing and risk management(4). Cost management was ranked sixth on this list.

Construction, as much as any sector, benefits from the time (and therefore cost) saved when a large language model (LLM) or other AI system completes manual tasks.

The big challenge lies in verifying the quality of the outputs returned.

In theory, almost anyone can use straightforward AI systems such as ChatGPT and Copilot, but inputting high-quality data and using the correct methodology to extract reliable answers must be non-negotiable in any business setting, particularly where project costing is involved.

Setting the standard

To ease surveyors into the world of AI, RICS published a professional conduct standard in September 2025(5).

The standard takes effect in March 2026 and is designed to ensure AI augments surveying practice while reducing the risk of complacent or irresponsible use.

The aim is to push quantity surveyors up the value chain – their expertise is ever more important in the world of AI, and they should remain the ultimate authority when extracting meaning from data and using it to inform decision-making.

The RICS standard stresses that AI systems carry an inherent risk of error and bias arising from mistakes or bias in the algorithms they use.

It recommends that surveyors apply professional judgement (i.e. knowledge, skills, experience and professional scepticism) to assess the reliability of AI outputs, and stipulates that RICS members and regulated firms must record this assessment in writing.

These written decisions should cover the relevant assumptions made, key areas of concern regarding reliability (including the reliability of underlying datasets), the reasons for each concern, whether the concerns can be mitigated, and the impact of each concern on the reliability of the output.

The assessment should conclude with a statement confirming whether the output can reasonably be used for its intended purpose.

RICS’s standard has arrived at a crucial time, as the urgency to use AI to leapfrog competitors on the global stage seeps into the everyday practices of businesses across sectors.

Surveyors are not immune to this, but their duty to keep construction projects within budget necessitates a stricter approach to how they apply AI.

Effective use of AI systems ultimately relies on high-quality prompts (i.e. sharing accurate data and asking the right questions) and on the professional’s ability to judge how sensible the outputs are.

Governance and training go hand in hand with this.

AI systems are developing quickly – agentic AI (software that uses the advanced natural language processing techniques of LLMs to solve complex problems) is progressing ever closer to simulating human behaviour and thought processes(6).

It’s imperative that cost professionals’ comprehension and skills keep pace with the rate of change and that human accountability is maintained. Regular updates to industry and business-level governance will support this.

Smarter AI systems will be able to present information with increasing confidence and conviction, but relying solely on these outputs for cost plans, benchmarking or valuation assessments comes with huge financial and reputational risks.

Professional indemnity insurance policies don’t automatically provide cover for errors made by AI where liability is unclear, so they cannot be used as a backup plan for overreliance on AI or misplaced judgement.

That’s not to say we shouldn’t embrace AI systems – there’s huge potential for AI to solve some of the biggest cost challenges facing construction.

For example, a report from contractor Mace suggests AI has a role in breaking the cycle of late, over-budget projects and in restoring the principles of clear direction, trust, the right incentives, accountability and timely decision-making in major programmes(7).

The report gives the example of using AI to tackle optimism bias and improve reference class forecasting, which estimates a project’s cost from the actual outcomes of comparable past projects rather than from the project team’s own assumptions.

Optimism bias is a common source of cost headaches in construction projects, particularly big national infrastructure schemes, the fallout from which continues to dampen investment sentiment in the UK.

Used with pragmatism and governance, AI is likely to be transformative in enabling construction professionals to overcome such hurdles and improve cost management outcomes.

AI is fundamentally not here to replace professional judgement – it’s here to make it stronger.


(1) NBS – Digital Construction Report 2025

(2) The Information – OpenAI Hits $12 Billion in Annualized Revenue, Breaks 700 Million ChatGPT Weekly Active Users

(3) OpenAI – How People Use ChatGPT

(4) RICS – RICS artificial intelligence in construction report 2025

(5) RICS – RICS professional standard

(6) IBM – What are AI agents?

(7) Mace Group – The Future of Major Programme Delivery